Instagram and Facebook will start censoring ‘graphic images’ of self-harm

In light of a recent tragedy, Instagram is updating the way it handles pictures depicting self-harm. Instagram and Facebook announced changes to their policies around content depicting cutting and other forms of self-harm in dual blog posts Thursday.

The changes follow the 2017 suicide of Molly Russell, a 14-year-old U.K. resident. After her death, her family discovered that Russell had engaged with accounts that depicted and promoted self-harm on the platform.

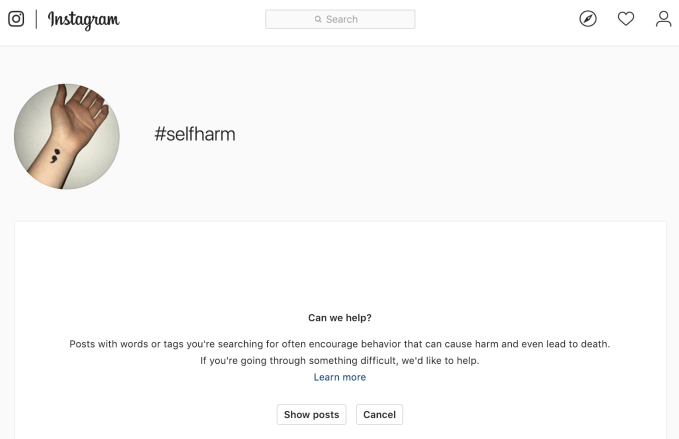

As the controversy unfolded, Adam Mosseri, the head of Instagram, penned an op-ed in the Telegraph to atone for the platform’s shortcomings, which at times carried serious consequences. Mosseri previously announced that Instagram would implement “sensitivity screens” to obscure self-harm content, but the new changes go a step further.

Starting soon, both platforms will no longer allow any “graphic images of self-harm,” most notably those that depict cutting. This content was previously allowed because the platforms worked under the assumption that allowing people to connect and confide around these issues was better than the alternative. After a “comprehensive review with global experts and academics on youth, mental health and suicide prevention,” those policies are shifting.

“… It was advised that graphic images of self-harm – even when it is someone admitting their struggles – has the potential to unintentionally promote self-harm,” Mosseri said.

Instagram will also begin burying non-graphic images about self-harm (pictures of healed scars, for example) so they don’t show up in searches, relevant hashtags or on the explore tab. “We are not removing this type of content from Instagram entirely, as we don’t want to stigmatize or isolate people who may be in distress and posting self-harm related content as a cry for help,” Mosseri said.

According to the blog post, after consulting with groups like the Centre for Mental Health and Save.org, Instagram tried to strike a balance that would still allow users to express their personal struggles without encouraging others to hurt themselves. For self-harm, like disordered eating, that’s a particularly difficult line to walk. It’s further complicated by the fact that not all people who self-harm have suicidal intentions, and the behavior has its own nuances apart from suicidality.

“Up until now, we’ve focused most of our approach on trying to help the individual who is sharing their experiences around self-harm. We have allowed content that shows contemplation or admission of self-harm because experts have told us it can help people get the support they need. But we need to do more to consider the effect of these images on other people who might see them. This is a difficult but important balance to get right.”

Mental health research and treatment teams have long been aware of “peer influence processes” that can make self-destructive behaviors take on a kind of social contagiousness. While online communities can also serve as a vital support system for anyone engaged in self-destructive behaviors, the wrong kind of peer support can backfire, reinforcing the behaviors or even popularizing them. Instagram’s failure to sufficiently safeguard against the potential impact of this kind of content on a hashtag-powered social network is fairly remarkable, considering that both Instagram and Facebook claim to have worked with mental health groups to get it right.

These changes are expected in the “coming weeks.” For now, a simple search of Instagram’s #selfharm hashtag still reveals a huge ecosystem of self-harmers on Instagram, including self-harm-related memes (some hopeful, some not) and many very graphic photos of cutting.

“It will take time and we have a responsibility to get this right,” Mosseri said. “Our aim is to have no graphic self-harm or graphic suicide related content on Instagram… while still ensuring we support those using Instagram to connect with communities of support.”

from TechCrunch https://tcrn.ch/2BoCtm6
